Music is energy in the form of vibration. Capturing that energy and playing it back as faithfully as possible is what the audio industry is all about. Our sole mission at KEF is to reproduce the energy sent to our speakers from whatever source, as faithfully and as accurately as the source allows. Upstream from the actual loudspeaker itself, the world of music has gone digital.

The tech that makes our music so easily available to us may be complicated, but getting at least a passing knowledge of it all isn’t. With that in mind, here is your Digital Music Primer:

Human voices and natural instruments like pianos, guitars, drums, and the like all reside in the analogue domain. From the 1880s on, we recorded and stored music through the use of devices that would transfer the vibrations of the musical energy onto a medium that was easily manipulated by these vibrations, including wax cylinders, acetate record albums, and magnetic recording tape.

In today’s world, these vibrations are captured digitally, allowing us to take whatever music we love, in whatever quantities we choose, anywhere we go. Musical performances are now converted to a series of digital bits. From there, the music remains a giant mass of organised 1s and 0s until you convert it back to analogue to listen to it.

After we record our analogue performance, we send our recordings through a circuit called an Analogue-to-Digital Converter (ADC). Once we've digitised the music via the ADC, we move the 1s and 0s into a digital file. When we get ready to listen back, we un-digitise it by reversing the process through a Digital-to-Analogue Converter (DAC). That analogue signal is then sent through our amp to our speakers.
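The record, store, and play-back chain described above can be sketched in a few lines. This is only an illustration, not how any real converter works: the "ADC" here is a function that measures a sine wave, the 440Hz test tone and the function names are our own assumptions, and the "file" is a WAV file held in memory using Python's standard `wave` module.

```python
import io
import math
import struct
import wave

SAMPLE_RATE = 44_100   # samples per second (the CD rate)
BITS = 16              # word length of each sample
FREQ_HZ = 440.0        # hypothetical test tone, chosen for illustration

def digitise(duration_s: float) -> list[int]:
    """Stand-in for an ADC: measure a sine wave at regular intervals."""
    peak = 2 ** (BITS - 1) - 1  # largest signed 16-bit value
    count = round(SAMPLE_RATE * duration_s)
    return [round(peak * math.sin(2 * math.pi * FREQ_HZ * n / SAMPLE_RATE))
            for n in range(count)]

def store(samples: list[int]) -> bytes:
    """Pack the samples into a mono WAV file held in memory."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(BITS // 8)
        wav.setframerate(SAMPLE_RATE)
        wav.writeframes(struct.pack(f"<{len(samples)}h", *samples))
    return buf.getvalue()

def load(data: bytes) -> list[int]:
    """Read the stored numbers back, ready to hand to a DAC."""
    with wave.open(io.BytesIO(data), "rb") as wav:
        frames = wav.readframes(wav.getnframes())
    return list(struct.unpack(f"<{len(frames) // 2}h", frames))

samples = digitise(0.01)          # one hundredth of a second of "music"
restored = load(store(samples))   # the digital file gives back the same numbers
print(len(samples), restored == samples)
```

The point of the round trip is that nothing changes once the music is digital: the numbers read back from the file are exactly the numbers that were written.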

The ADC measures the amplitude of the signal at a set rate, converts each measurement (a sample) to digital information (binary 1s and 0s), and that information is then stored as a digital file.

Take whatever song you’re thinking of right now and freeze a single slice of it in time – that’s what an ADC does. The resolution of the sampled music comes from what we do with this singular slice of time.

The number of samples we take in each second of music is called the sampling rate. With Red Book CD (the standard for CDs) we take 44,100 samples per second, equating to a sampling rate of 44.1kHz. The more frequently you sample, the more of the original signal you retain, so it stands to reason that a sample rate of 96kHz (considered the lower limit of high resolution) will sound better than 44.1kHz, but not as good as 192kHz.
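To put those sampling rates into perspective, here is a small sketch that works out how far apart the samples are in time and how many of them a song contains. The function names and the three-minute song length are our own assumptions for the example.

```python
def sample_interval_us(sample_rate_hz: int) -> float:
    """Time between two consecutive samples, in microseconds."""
    return 1_000_000 / sample_rate_hz

def samples_in(duration_s: float, sample_rate_hz: int) -> int:
    """How many samples are taken over a stretch of music (per channel)."""
    return round(duration_s * sample_rate_hz)

for rate in (44_100, 96_000, 192_000):
    print(f"{rate / 1000:g}kHz: one sample every "
          f"{sample_interval_us(rate):.2f} microseconds, "
          f"{samples_in(180, rate):,} samples in a three-minute song")
```

At the CD rate, that works out to a new sample roughly every 23 millionths of a second.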

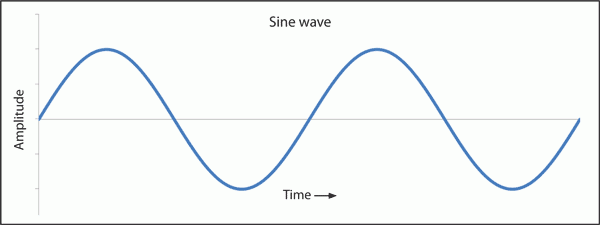

If we look at the sine wave shown here, which represents an audio signal, the y-axis (top to bottom) is amplitude or volume, and the x-axis (left to right) is time.

To convert the signal to digital, we assign a digital number to pre-determined sections on the y-axis; the size of that number is the word size. Common word sizes in audio are 16- and 24-bit. Then we take samples of the signal along the x-axis. The number of times we sample per second is called the sample rate (the more we sample, the better the sound).

In the digital domain we can store the information on a CD, hard drive or the Cloud (which is really just a hard drive someone else owns). Magnetic tape and vinyl records are simply analogue storage devices.

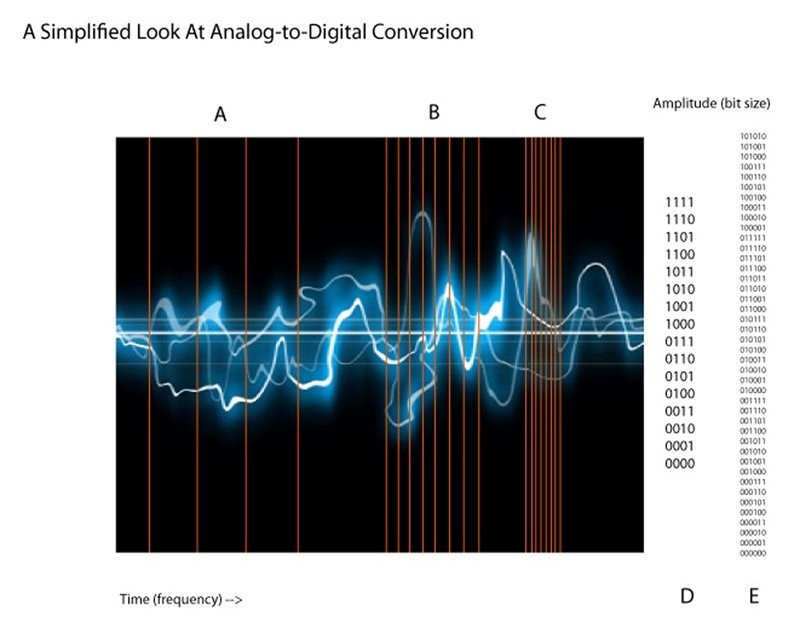

The illustration below simplifies the conversion process by using relative points of reference to make the point. It is not meant to be an accurate, mathematical depiction of analogue-to-digital conversion.

The vertical red lines represent the sample rate.

The lines at A show a very low sample rate, which leaves out a lot of the audio signal. For example, lower sample rates are used with telephones and hand-held radios, where only a very limited frequency range (the human voice) needs to be captured.

B represents a medium sample rate. For this demonstration, we could say this is a sample rate of 44.1kHz, and C would represent a very high sample rate used for professional applications. The sampling standard for CDs and commercial audio started at 44.1kHz, but 48kHz is now considered the standard minimum sample rate.
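One way to see why a low sample rate leaves out so much of the signal is the Nyquist limit: a given sample rate can only represent frequencies up to half its value. The sketch below applies that rule to a few rates. The 8kHz telephone figure is our own assumption of a typical voice-grade rate and is not taken from the illustration.

```python
def highest_frequency_hz(sample_rate_hz: float) -> float:
    """Nyquist limit: the highest frequency a sample rate can represent."""
    return sample_rate_hz / 2

# The telephone rate is an assumed typical value; the others appear in the text.
rates_hz = {
    "telephone (assumed)": 8_000,
    "CD": 44_100,
    "48kHz": 48_000,
    "192kHz": 192_000,
}

for name, rate in rates_hz.items():
    limit_khz = highest_frequency_hz(rate) / 1000
    print(f"{name}: captures frequencies up to {limit_khz:g}kHz")
```

With human hearing usually quoted as reaching about 20kHz, this is why 44.1kHz was considered enough for CD, while a voice-only rate covers far less of the audible range.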

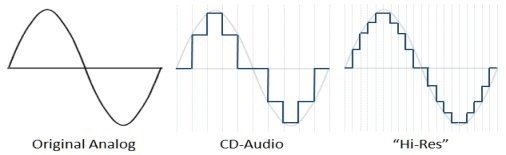

But the sample rate isn't the only thing we should be concerned about. Word length is actually more important. Basically, we're converting all of our wonderful analogue music to 1s and 0s, so it stands to reason that the more 1s and 0s we have, the more detail we capture and therefore, the higher the sound quality when we listen back. It’s not just the number of samples we take, but the amount of that information we store that determines the sound quality of a digitised song. That’s where bit depth comes in – the deeper the bit depth (the larger the data word), the higher the resolution.

Red Book CD uses a bit depth of 16 bits. The broadcast video standard for audio, as well as for files considered high resolution, is 24 bits. Dynamics are particularly affected by bit depth, and by adding those extra 8 bits of data, our ability to maintain the dynamics of a musical passage is greatly enhanced.
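The link between bit depth and dynamics can be estimated with a common rule of thumb: the theoretical dynamic range of a word is the ratio between its largest value and its smallest step, expressed in decibels. The sketch below is an idealised calculation under that assumption; real converters fall short of these figures.

```python
import math

def dynamic_range_db(bits: int) -> float:
    """Theoretical ratio between full scale and the smallest step, in dB."""
    return 20 * math.log10(2 ** bits)

for bits in (16, 24):
    print(f"{bits}-bit: about {dynamic_range_db(bits):.0f}dB of dynamic range")
```

Each extra bit adds roughly 6dB, so the additional 8 bits are worth close to 48dB on paper.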

The 1s and 0s at the right of the illustration represent the digital record of the sampled amplitude. The sample is taken at a specific time, and the amplitude of the signal at that time is stored as data. D represents a small word of four bits in length. You can see that with only four bits of data, we are not able to capture a lot of amplitude data, irrespective of the number of times we take a sample. With four bits, we are only able to capture 16 different data points.

The numbers in column E represent a 6-bit word. With 6 bits, we are able to capture 64 data points, so by simply adding two more bits, we are able to capture four times the information. The larger our word, the more detailed our sample storage; the higher our sample rate, the more audio signal we can capture. In the real world, a 16-bit word is able to capture 65,536 separate data points, but by simply adding 8 more bits, our 24-bit word can now represent 16,777,216 data points, or 256 times as many as with 16 bits.
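The figures above all come from the same piece of arithmetic: a word of n bits can represent 2 to the power of n different values. The sketch below reproduces them and also shows how one amplitude ends up as a 4-bit or 6-bit word. The `quantise` helper and the example amplitude of 0.3 are hypothetical, chosen only to illustrate the idea.

```python
def levels(bits: int) -> int:
    """Number of distinct values a word of this length can represent."""
    return 2 ** bits

def quantise(amplitude: float, bits: int) -> int:
    """Map an amplitude between -1.0 and 1.0 to the nearest available step."""
    top = levels(bits) - 1
    return round((amplitude + 1) / 2 * top)

for bits in (4, 6, 16, 24):
    print(f"{bits}-bit word: {levels(bits):,} data points")

print("24-bit versus 16-bit:", levels(24) // levels(16), "times as many")

# The same amplitude stored with a short word and a slightly longer one.
for bits in (4, 6):
    step = quantise(0.3, bits)
    print(f"{bits}-bit word for amplitude 0.3: {step:0{bits}b}")
```

The longer word lands closer to the true amplitude because its steps are finer.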

The trade-off is file size. When we increase the resolution of the file from 16 to 24 bits, we also increase the size of the file by a factor of 1.5, and raising the sample rate increases it further. When storage was expensive and limited, a lower resolution was necessary in order to store any usable amount of music. But with the exception of your phone, storage has become inexpensive and quite expansive, which allows us to store more decent-sounding music than ever before.
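As a rough check on the storage cost, the size of an uncompressed recording is simply sample rate × bytes per sample × channels × duration. The sketch below assumes a stereo, four-minute track and ignores file headers; both the track length and the function name are our own choices for the example.

```python
def pcm_size_mb(sample_rate_hz: int, bits: int, seconds: float,
                channels: int = 2) -> float:
    """Size of uncompressed audio in megabytes (1MB = 1,000,000 bytes)."""
    return sample_rate_hz * (bits / 8) * channels * seconds / 1_000_000

TRACK_SECONDS = 240  # assumed four-minute song

cd = pcm_size_mb(44_100, 16, TRACK_SECONDS)
same_rate_24 = pcm_size_mb(44_100, 24, TRACK_SECONDS)
hi_res = pcm_size_mb(96_000, 24, TRACK_SECONDS)

print(f"16-bit/44.1kHz: {cd:.1f}MB")
print(f"24-bit/44.1kHz: {same_rate_24:.1f}MB ({same_rate_24 / cd:.2f}x)")
print(f"24-bit/96kHz:   {hi_res:.1f}MB ({hi_res / cd:.2f}x)")
```

Going from 16 to 24 bits alone makes the file half as big again; it is the combination of longer words and higher sample rates that makes high-resolution files several times larger than CD-quality ones.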

Where space is still limited, digital compression comes into play. To fit as much music in as small a space as possible, we learned how to compress the digital file, basically by removing data that is not absolutely necessary to the coherence of the song. The basic notes and sounds will still be there, but some of the life and dynamics are removed. Through ear buds or computer speakers, you’ll probably not notice anything missing, but when you listen on a better-quality system, you will definitely notice the missing bits.

Bit rate is a separate measure: it is the speed at which we digitally transmit information, or in other words the capacity of a digital transmission system to transmit data. The higher the bit rate, the more cohesive your music or video will be when you are streaming. That digital transmission system might be the software you use to rip your CDs, the speed of your local network when you stream music, or any of the several digital transmissions that take place in the digital music stream.

For example, Spotify’s bitrate on Android, iOS, desktop devices, and the app is listed at 320kbps for Premium users. For Chromecast users, the bitrate dips to 256kbps. Is there a difference in quality? Yes. Is that difference (64kbps) noticeable? Maybe.
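To give those streaming figures some context, the sketch below works out the bit rate that uncompressed audio would need (sample rate × bit depth × channels) and compares it with the two Spotify figures quoted above. Stereo playback is assumed, and the function name is ours.

```python
def pcm_bit_rate_kbps(sample_rate_hz: int, bits: int, channels: int = 2) -> float:
    """Bit rate needed to carry uncompressed audio, in kilobits per second."""
    return sample_rate_hz * bits * channels / 1000

cd_kbps = pcm_bit_rate_kbps(44_100, 16)

# Streaming figures quoted in the text above.
for stream_kbps in (320, 256):
    print(f"{stream_kbps}kbps stream carries about "
          f"1/{cd_kbps / stream_kbps:.1f} of the {cd_kbps:g}kbps "
          f"an uncompressed CD would need")
```

This is why streaming services rely on compression: even the highest of the quoted rates is less than a quarter of what CD-quality audio takes up before it is compressed.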

Bluetooth aptX has a potential bitrate of 352kbps (depending on the source). Apple AirPlay bitrates, under the AAC format it uses, are capped at 250kbps. On a high-quality system, the difference will be noticeable and quite annoying to listen to, but as you approach mid-level systems and lower, that difference becomes harder to discern. But there are still several other factors to consider when we talk about the quality of digital music.

To sum all of this up, let’s look at it this way: the higher the number, the better the listening experience. A 96kHz sample rate is better than a 44.1kHz sample rate, but not as good as 192kHz. A word length of 24 bits is far superior to a word length of 16 bits (it’s an exponential increase in resolution, literally). A bit rate of 250kbps is generally not as good as a bit rate of 320kbps.

This all boils down to one thing – if it sounds good to you, then great, but never limit yourself to what you’re used to or comfortable with. Science has given us the incredible gift of amazing-sounding music at quite literally the click of a mouse button – it’s all there for the taking.
